How Autonomous Medical Coding Solves Healthcare RCM’s Biggest Problems

Discover how autonomous medical coding works, how it differs from CAC, and how it helps boost accuracy, reduce denials, and support RCM teams.

November 18, 2025

Key Takeaways:

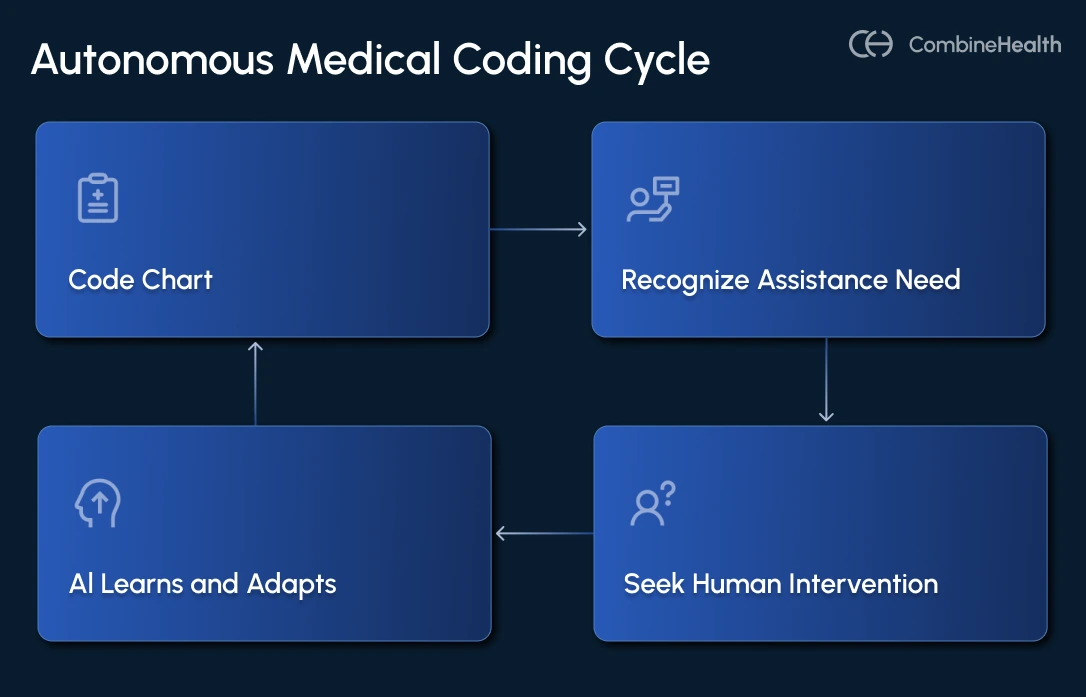

• Autonomous medical coding uses advanced AI to read clinical documentation, apply coding guidelines, and generate complete, compliant code sets—while knowing when to escalate uncertain cases to humans.

• Unlike CAC, which only suggests codes, autonomous systems complete the entire workflow for high-confidence charts and act as a scalable “junior coder” that reduces workload and bottlenecks.

• Autonomous coding became possible through breakthroughs in PLM-ICD models, large language models, retrieval-augmented reasoning, and multi-agent architectures with built-in explainability.

• Autonomous systems can reduce denials, improve accuracy, ease the strain of coder shortages, and cut administrative costs.

• CombineHealth’s AI Medical Coding Agent, Amy, embodies responsible autonomy by coding confidently, escalating uncertainty, explaining her decisions, and continuously learning from feedback. Book a demo to see her in action.

Medical coding is a lot like translating a story for an audience that speaks an entirely different language. When a patient comes in, the provider writes the notes, but coders turn that encounter into precise numbers and letters that insurers understand. And doing that well takes far more than familiarity with ICD-10 or CPT.

That’s because coding guidelines shift every year. Payer rules don’t match each other. And every specialty speaks its own dialect—dermatology coding relies on lesion counts and sizes, while cardiology coding hinges on device placement and catheterization details.

Even the best coders are up against rising volumes, tighter turnaround times, and constant regulatory updates.

This is where autonomous medical coding technology comes in—not to replace human coders, but to extend their abilities.


What Is Autonomous Medical Coding?

Autonomous medical coding means an AI system fully codes a chart on its own while still recognizing when it needs human help.

It uses advanced AI systems that can read clinical documentation, interpret the clinical story, apply coding guidelines, and generate complete, compliant ICD-10, CPT, and modifier codes without requiring a human to manually review every chart.

How Is Autonomous Medical Coding Different From Computer-Assisted Coding?

Unlike traditional computer-assisted coding (CAC), which only suggests codes, autonomous systems complete the coding workflow end-to-end for high-confidence encounters and escalate only the exceptions to human coders.

In many organizations, coders can spend almost as much time reviewing CAC output as they would coding from scratch. The software speeds up lookups, but the cognitive load and the responsibility stay with the coder.

Autonomous medical coding flips that dynamic.

Think of it as a junior coder that can finish entire encounters on its own. The system reads the full chart, assembles a complete, compliant code set, performs its own internal checks, and only sends cases to humans when something is unclear or inherently risky.


How Autonomous Medical Coding Came Into Existence

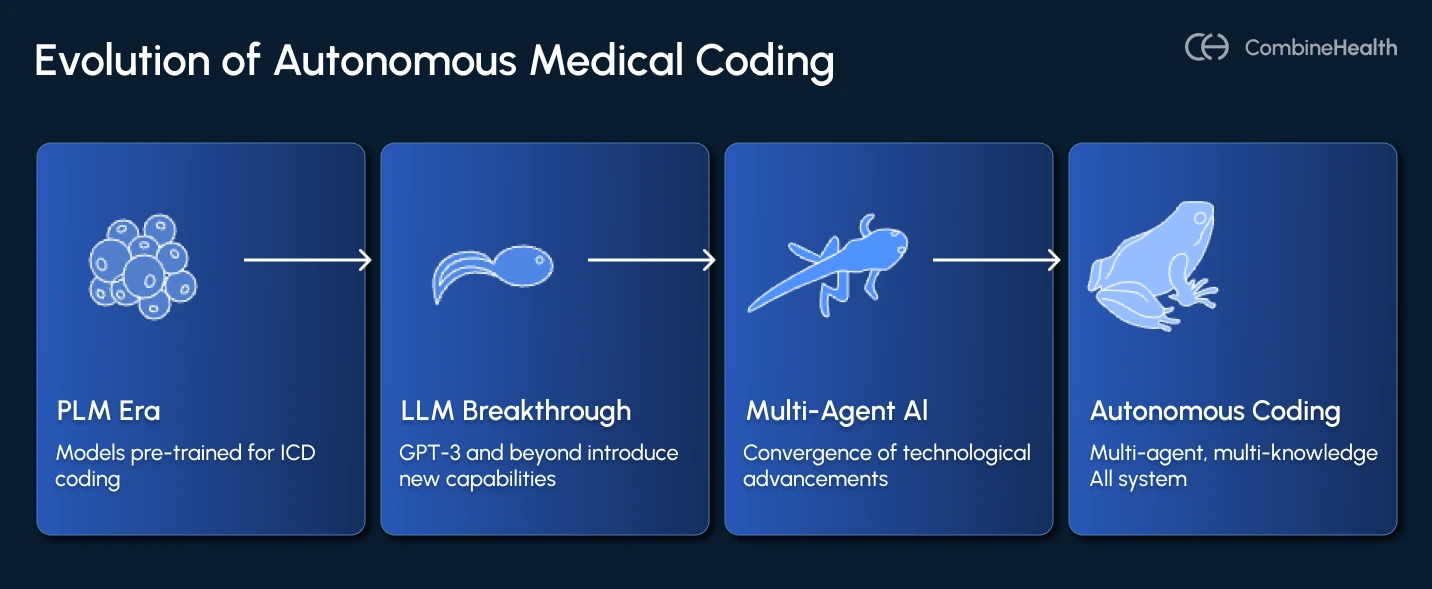

The first wave of automated medical coding relied on rule-based systems that used predefined linguistic rules, regular expressions, and medical terminology databases to extract information from clinical text.

BERT and its medical cousins (BioBERT, ClinicalBERT, Med-BERT) then marked a decisive shift in how machines understood clinical language. These models were pre-trained on biomedical and clinical data such as PubMed articles, clinical notes, and structured records from millions of patient encounters. Coding research saw a jump in accuracy on benchmarks built from the MIMIC-III dataset, especially for high-frequency ICD codes.

But even with these gains, BERT-based models still behaved like assistants, not coders. They required heavily curated training data, struggled with very long notes, and rarely generalized to rare or novel ICD/CPT codes without manual engineering.

But what came next changed everything.

1. The PLM Era: Models Pre-trained Specifically for ICD Coding

By 2022, PLM-ICD and related approaches had come into the limelight. These models adapt pre-trained language models specifically for ICD coding and fine-tune them on large collections of coded clinical notes.

These models introduced three breakthroughs:

- Label-wise attention, allowing the model to read a clinical note differently for each ICD code

- Hierarchical reasoning, capturing parent–child relationships across the ICD-9/10 tree

- Long-sequence handling, solving the “notes are too long for transformers” problem
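Label-wise attention is easier to see in a few lines of code. The idea is that each code gets its own query vector. That query scores every token in the note, and the scores build a separate, code-specific summary of the note. The sketch below is a minimal pure-Python version that assumes the token vectors were already produced by some encoder. The labels "A" and "B" and all the numbers are toy values, not learned PLM-ICD parameters.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def label_wise_attention(token_vecs, label_queries, label_weights, label_biases):
    """Score each label with its own attention over the note's tokens.

    token_vecs:    n token vectors (length d) from an upstream encoder (assumed).
    label_queries: label -> query vector u_l used to attend over tokens.
    label_weights: label -> output vector w_l; label_biases: label -> scalar b_l.
    Returns (probabilities per label, attention weights per label).
    """
    d = len(token_vecs[0])
    probs, attention = {}, {}
    for label, u in label_queries.items():
        # How relevant each token is to THIS label.
        alpha = softmax([dot(h, u) for h in token_vecs])
        # Label-specific note representation: attention-weighted sum of tokens.
        v = [sum(a * h[k] for a, h in zip(alpha, token_vecs)) for k in range(d)]
        logit = dot(label_weights[label], v) + label_biases[label]
        probs[label] = 1.0 / (1.0 + math.exp(-logit))  # independent sigmoid per label
        attention[label] = alpha
    return probs, attention


# Toy example: three 2-dimensional "token" vectors and two labels.
tokens = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
queries = {"A": [5.0, 0.0], "B": [0.0, 5.0]}
weights = {"A": [4.0, 0.0], "B": [0.0, -4.0]}
biases = {"A": -1.0, "B": 0.0}
probs, attention = label_wise_attention(tokens, queries, weights, biases)
```

Because each label has its own attention weights, the same weights show which tokens drove each code. That is one reason label-wise attention is often paired with explainability features.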

Still, PLM-ICD models had two major shortcomings:

- They required significant fine-tuning and struggled across specialties unless retrained.

- Rare codes remained a blind spot, because the long tail of the ICD code distribution includes thousands of codes seen only a handful of times.

2. The LLM Breakthrough: GPT-3 and Beyond

Large language models (LLMs) such as GPT-3, GPT-4, PaLM, Claude, and later GPT-4o introduced capabilities that earlier models simply couldn’t match. For the first time, models could:

- Read a provider note

- Apply coding guidelines

- Detect missing documentation

- Cross-reference payer rules

- Suggest compliant ICD-10 and CPT codes, E/M levels, and modifiers

But raw LLMs also introduced challenges. They:

- Hallucinated

- Over-coded

- Predicted too many codes (high recall, low precision)

- Struggled to justify decisions

Most importantly, they offered little real explainability and were not safe to deploy without a structured framework around them.
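"High recall, low precision" has a concrete meaning when each chart's codes are treated as a set. The sketch below computes set-based precision, recall, and F1 for one hypothetical chart where the model over-codes. The codes are illustrative only and are not drawn from a real encounter.

```python
def code_set_metrics(predicted, gold):
    """Set-based precision, recall, and F1 for one chart's code set."""
    predicted, gold = set(predicted), set(gold)
    true_pos = len(predicted & gold)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical chart: the reference code set has two codes, and the model
# returns both of them plus two codes the documentation does not support.
gold = {"E11.9", "I10"}
predicted = {"E11.9", "I10", "E78.5", "R73.9"}

p, r, f1 = code_set_metrics(predicted, gold)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
# prints: precision=0.50 recall=1.00 f1=0.67
```

Recall is perfect because nothing was missed, but half of the submitted codes are unsupported. In a billing context, those extra codes are the over-coding that creates audit risk.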

CombineHealth’s Amy, the AI Medical Coding Agent, was built with exactly these problems in mind. She provides line-by-line rationale, cites the documentation she relied on, and lays out the payer- and guideline-specific reasoning behind every code she assigns, which a raw, general-purpose LLM does not do on its own.

3. Autonomous Medical Coding: Multi-Agent, Multi-Knowledge AI

Autonomous medical coding isn’t “GPT-4 plugged into an EHR.” It is the convergence of several technological advancements:

- Multi-modal understanding: AI agents can read across various sources, including provider notes, orders, labs, operative reports, payer rules, and more.

- Retrieval-augmented reasoning: Autonomous coders can now fetch payer-specific policies, medical necessity criteria, coding guideline excerpts, and similar past encounters.

- Explainability built in: Each assigned code is accompanied by highlighted evidence from the chart, a rationale aligned to guidelines, a confidence score, and any documentation gaps.

- Human-in-the-loop escalation: Autonomous coders can complete the majority of charts themselves, flag only ambiguous or guideline-sensitive cases, and request provider clarification when documentation is weak.
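The explainability output described above can be pictured as a small record attached to every code. Storing it this way also enables a cheap guardrail against hallucination: check that every evidence quote the model cites actually appears in the note. The sketch below uses hypothetical field and function names, not CombineHealth's schema.

```python
from dataclasses import dataclass, field


@dataclass
class CodeAssignment:
    """Hypothetical explainability record for one assigned code."""
    code: str
    rationale: str
    evidence: list[str]   # verbatim quotes the model says it relied on
    confidence: float     # 0.0-1.0
    documentation_gaps: list[str] = field(default_factory=list)


def _normalize(text: str) -> str:
    # Case-insensitive, whitespace-insensitive comparison.
    return " ".join(text.lower().split())


def ungrounded_evidence(assignment: CodeAssignment, note: str) -> list[str]:
    """Return evidence quotes that do NOT appear verbatim in the note."""
    normalized_note = _normalize(note)
    return [q for q in assignment.evidence if _normalize(q) not in normalized_note]


note = "Assessment: Type 2 diabetes, well controlled on metformin. No complications noted."
assignment = CodeAssignment(
    code="E11.9",
    rationale="Type 2 diabetes documented without complications.",
    evidence=["Type 2 diabetes, well controlled", "HbA1c 9.2"],  # second quote is not in the note
    confidence=0.93,
)
```

Here `ungrounded_evidence(assignment, note)` returns `["HbA1c 9.2"]`. A pipeline could treat any non-empty result as a reason to escalate the chart instead of auto-coding it.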

How Does Autonomous Coding Work?

Autonomous medical coding solutions use a coordinated stack of NLP, machine learning, transformer models, and clinical knowledge engines to read provider documentation and assign the correct ICD-10 codes, CPT codes, E/M codes, and modifier codes.

Here’s a step-by-step breakdown of how the process works:

1. Data Ingestion

The system connects directly to the EHR and pulls in everything documented during the encounter—structured data (med lists, vitals, problem lists) and unstructured text (provider notes, operative reports, consults, discharge summaries).

CombineHealth’s Amy (AI Medical Coding Agent) integrates directly with many EHR systems and reads all the medical data to generate medical codes.
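Ingestion has to merge structured fields and free-text documents into something a language model can read. The sketch below shows one way to do that with section labels, assuming a hypothetical `Encounter` structure. It does not show the EHR connection itself, which in practice typically goes through interfaces such as HL7 v2 feeds or FHIR APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Encounter:
    """Hypothetical in-memory view of one encounter after it is pulled from the EHR."""
    encounter_id: str
    # Structured data
    problem_list: list[str] = field(default_factory=list)
    medications: list[str] = field(default_factory=list)
    vitals: dict[str, str] = field(default_factory=dict)
    # Unstructured documents, keyed by document type
    documents: dict[str, str] = field(default_factory=dict)

    def to_model_input(self) -> str:
        """Flatten the encounter into one section-labelled text, skipping empty sections."""
        parts = []
        if self.problem_list:
            parts.append("[PROBLEM LIST]\n" + "\n".join(self.problem_list))
        if self.medications:
            parts.append("[MEDICATIONS]\n" + "\n".join(self.medications))
        if self.vitals:
            parts.append("[VITALS]\n" + "\n".join(f"{k}: {v}" for k, v in self.vitals.items()))
        for doc_type, text in self.documents.items():
            parts.append(f"[{doc_type.upper()}]\n{text.strip()}")
        return "\n\n".join(parts)


enc = Encounter(
    "ENC-001",
    medications=["metformin 500 mg twice daily"],
    vitals={"BP": "128/82"},
    documents={"progress note": "Seen for diabetes follow-up. "},
)
print(enc.to_model_input())
```

The section labels let downstream steps tell a problem-list entry apart from something the provider wrote in the note, which can matter for what counts as documentation.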

2. Clinical Language Understanding

Advanced NLP models parse the note to identify diagnoses, symptoms, procedures, laterality, acuity, and clinical relationships.

Then, transformer-based models like ClinicalBERT or domain-specific LLMs allow the system to understand context. For example, they can tell that "discharge" means something completely different in "patient discharged home" than in "purulent wound discharge."
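Why context matters is easiest to see with a deliberately simple, rule-based stand-in. The toy function below guesses the sense of "discharge" from the words near it. The cue-word lists are hypothetical. Transformer models learn such cues from data rather than relying on hand-written lists, and this sketch is not how they work internally.

```python
import re

# Hypothetical, hand-picked cue words for illustration only.
CLINICAL_FINDING_CUES = {"purulent", "serous", "bloody", "wound", "drainage", "foul"}
DISPOSITION_CUES = {"home", "snf", "facility", "instructions", "transferred"}


def discharge_sense(sentence: str, window: int = 3) -> str:
    """Guess whether 'discharge' refers to a clinical finding or a disposition."""
    tokens = re.findall(r"[a-z]+", sentence.lower())
    for i, tok in enumerate(tokens):
        if tok.startswith("discharge"):  # matches discharge, discharged, discharges
            context = set(tokens[max(0, i - window): i + window + 1])
            finding = len(context & CLINICAL_FINDING_CUES)
            disposition = len(context & DISPOSITION_CUES)
            if finding > disposition:
                return "clinical finding"
            if disposition > finding:
                return "disposition"
            return "ambiguous"
    return "not mentioned"


print(discharge_sense("Patient discharged home in stable condition."))
print(discharge_sense("Dressing changed; purulent wound discharge noted."))
```

A fixed word list breaks as soon as the phrasing changes. That brittleness is exactly why learned, context-aware models replaced rules like these.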

3. Code Selection

The system maps extracted concepts to ICD-10 and CPT codes using hierarchical search, guideline retrieval, and historical pattern matching. It assembles complete code sets when multiple diagnoses or procedures are required and applies the correct modifiers automatically.

CombineHealth’s Amy (AI Medical Coding Agent) supports multiple code sets, including ICD-10, CPT, E/M codes, facility codes, and HCPCS codes. She finds the appropriate codes and gives a clear explanation (her thought process) of why she picked each one.
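Hierarchical search in code selection means starting from a category and moving down to the most specific code the documentation supports. The sketch below shows that step for a tiny slice of ICD-10-CM category E11 (type 2 diabetes mellitus). The mapping from documented findings to child codes is a hypothetical simplification. Real coding follows the Alphabetic Index, Tabular List instructions, and the Official Guidelines, including "use additional code" notes (for example, a CKD stage code with E11.22) that this sketch omits.

```python
# Illustrative slice of ICD-10-CM category E11. Each child lists the documented
# findings it requires (a hypothetical, simplified mapping).
ICD10_CHILDREN = {
    "E11": {
        "E11.9": set(),                          # without complications
        "E11.65": {"hyperglycemia"},             # with hyperglycemia
        "E11.22": {"chronic kidney disease"},    # with diabetic chronic kidney disease
    },
}


def most_specific_codes(category: str, documented: set[str]) -> list[str]:
    """Return the child codes whose required findings are all documented.

    Falls back to the default child (no requirements) when nothing more
    specific is supported by the documentation.
    """
    children = ICD10_CHILDREN[category]
    matched = [code for code, required in children.items()
               if required and required <= documented]
    if matched:
        return sorted(matched)
    return [code for code, required in children.items() if not required]


print(most_specific_codes("E11", {"hyperglycemia"}))
print(most_specific_codes("E11", set()))
```

The fallback branch mirrors how a coder defaults to the less specific code only when the documentation does not support anything more specific.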

4. Compliance & Confidence Checks

Before finalizing the codes, the engine checks medical necessity, bundling rules, payer-specific edits, and documentation sufficiency. High-confidence encounters are auto-coded; lower-confidence ones are routed to human coders with explanations, evidence highlights, and suggested corrections.
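A bundling check is one of the simpler compliance checks to picture. Some pairs of procedure codes may not be billed together unless a modifier indicates a distinct service. The sketch below flags such pairs. The code pairs are placeholders, not real edits; real pairs come from sources such as CMS's National Correct Coding Initiative (NCCI) procedure-to-procedure tables. The modifier set is a subset of the NCCI-associated modifiers (59 and XE/XS/XP/XU).

```python
# Placeholder edit table: (column-1 code, column-2 code) pairs that may not be
# billed together without a distinct-service modifier on the column-2 code.
BUNDLED_PAIRS = {("PROC_A", "PROC_B"), ("PROC_A", "PROC_C")}
DISTINCT_SERVICE_MODIFIERS = {"59", "XE", "XS", "XP", "XU"}


def bundling_violations(lines):
    """lines: list of (code, set_of_modifiers), one line per code.

    Returns the bundled pairs billed together without a distinct-service modifier.
    The check only flags; appending a modifier is valid only when the
    documentation supports a separate, distinct service.
    """
    codes = {code: mods for code, mods in lines}
    violations = []
    for primary, secondary in sorted(BUNDLED_PAIRS):
        if primary in codes and secondary in codes:
            if not (codes[secondary] & DISTINCT_SERVICE_MODIFIERS):
                violations.append((primary, secondary))
    return violations


claim = [("PROC_A", set()), ("PROC_B", set()), ("PROC_C", {"XS"})]
print(bundling_violations(claim))
```

A real implementation would also need to respect edit-level details, such as edits that allow no modifier bypass, which this sketch ignores.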

To determine the MDM complexity of a case, CombineHealth’s Amy (AI Medical Coding Agent) lists the problems addressed, the data reviewed and analyzed, and the risk of complications.
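These three items correspond to the three MDM elements in the AMA's 2021 office/outpatient E/M guidelines. The overall MDM level is the highest level at which at least two of the three elements are met, which works out to the middle value of the three element levels. The minimal sketch below assumes each element has already been graded, which is the genuinely hard part and is not shown. It also ignores time-based leveling, which can be used instead of MDM.

```python
LEVELS = ["straightforward", "low", "moderate", "high"]

# Office/outpatient E/M codes by MDM level (AMA guidelines effective 2021).
ESTABLISHED_PATIENT = {"straightforward": "99212", "low": "99213",
                       "moderate": "99214", "high": "99215"}
NEW_PATIENT = {"straightforward": "99202", "low": "99203",
               "moderate": "99204", "high": "99205"}


def mdm_level(problems: str, data: str, risk: str) -> str:
    """Highest level met by at least 2 of the 3 elements, i.e. the median level."""
    ranks = sorted(LEVELS.index(x) for x in (problems, data, risk))
    return LEVELS[ranks[1]]


def office_em_code(problems: str, data: str, risk: str, new_patient: bool = False) -> str:
    level = mdm_level(problems, data, risk)
    table = NEW_PATIENT if new_patient else ESTABLISHED_PATIENT
    return table[level]


print(mdm_level("high", "low", "straightforward"))    # only one element reaches "high"
print(office_em_code("moderate", "moderate", "low"))  # established patient
```

Taking the median captures the "2 of 3" rule directly. If two elements reach a level, the middle value reaches it too, and if only one does, the middle value stays below it.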

5. Continuous Learning

Every correction from human coders, every denial, and every updated guideline feeds back into the system, helping it become more accurate over time.
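One piece of that feedback loop is easy to sketch: record whether reviewers accept each AI-assigned code, and let auto-coding depend on the observed agreement. The class name and the default thresholds below (50 reviews, 95% agreement) are hypothetical. Actually updating the model, for example by fine-tuning or refreshing a retrieval index, is outside this sketch.

```python
from collections import defaultdict


class FeedbackTracker:
    """Track, per code, how often human reviewers keep the AI's code."""

    def __init__(self, min_reviews: int = 50, min_agreement: float = 0.95):
        self.min_reviews = min_reviews      # hypothetical default
        self.min_agreement = min_agreement  # hypothetical default
        self.accepted = defaultdict(int)
        self.reviewed = defaultdict(int)

    def record(self, ai_code: str, final_code: str) -> None:
        """Log one reviewed assignment; it counts as accepted if the coder kept it."""
        self.reviewed[ai_code] += 1
        if ai_code == final_code:
            self.accepted[ai_code] += 1

    def agreement(self, code: str):
        """Fraction of reviews that kept the code, or None if never reviewed."""
        n = self.reviewed.get(code, 0)
        return self.accepted.get(code, 0) / n if n else None

    def allow_auto_coding(self, code: str) -> bool:
        """Auto-code only codes with enough reviews and high reviewer agreement."""
        rate = self.agreement(code)
        return (rate is not None
                and self.reviewed[code] >= self.min_reviews
                and rate >= self.min_agreement)


tracker = FeedbackTracker(min_reviews=3, min_agreement=0.9)
for _ in range(3):
    tracker.record("I10", "I10")        # coder agreed each time
tracker.record("E11.9", "E11.65")       # coder changed to a more specific code
print(tracker.allow_auto_coding("I10"), tracker.allow_auto_coding("E11.9"))
```

Gating by code this way means a newly introduced or frequently corrected code stays in human review until it earns enough agreement.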

How Autonomous Medical Coding Benefits Revenue Cycle Teams

Autonomous medical coding directly strengthens the revenue cycle by eliminating the bottlenecks that slow teams down. Here’s how it creates meaningful impact across coding, billing, and operations:

- Eliminates Routine Manual Work: Autonomous coding systems can process large volumes of routine, high-confidence charts without human intervention, reducing the need for manual coding and human review.

- Improves Accuracy and Consistency: Because autonomous coders apply the same rules, logic, and guidelines every time, they can substantially reduce variation in coding quality. This can mean fewer missed codes, fewer compliance risks, and fewer downstream denials caused by documentation or coding errors.

- Eases the Pressure of Coder Shortages: With coder shortages and high turnover, many RCM teams are stretched thin. Autonomous coding absorbs large portions of the workload, giving organizations a scalable way to handle rising chart volumes without scrambling to hire or train more staff.

- Cuts Administrative Costs and Boosts Efficiency: By reducing manual coding hours, organizations lower labor costs and minimize reliance on outsourced coding.

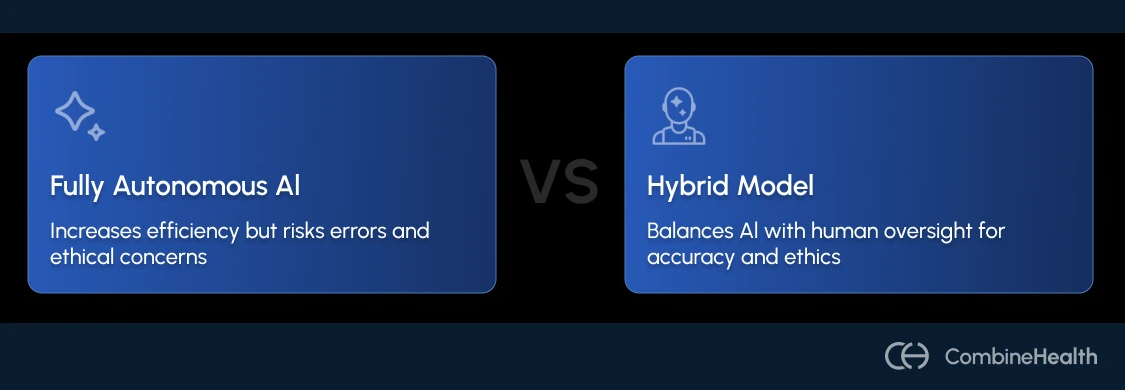

Should Medical Coding Be Completely Autonomous?

No, medical coding should not be completely autonomous, but AI should play a central role in a carefully designed hybrid model with strategic human oversight.

Here’s why:

1. Legal and Financial Liability Risks

The legal and financial consequences of coding errors create compelling arguments against complete automation without human oversight.

For example, in 2025, the U.S. Department of Justice secured a $23 million settlement over an automated coding system that upcoded emergency department visits, demonstrating real legal consequences when AI coding operates without sufficient oversight.

2. Complexity and Contextual Understanding Limitations

The complexity of the ICD-10-CM system, with roughly 68,000 diagnosis codes at its introduction and more added since, combined with frequent updates to coding standards, increases the risk of errors and inconsistencies.

Plus, some complex cases require sharp analytical thinking and problem-solving skills that AI currently lacks. Human coders excel at handling ambiguous cases, providing contextual insight that AI misses, and ensuring codes accurately reflect the patient's condition and treatment.

CombineHealth’s Amy (AI Medical Coding Agent) routes about 15% of charts with ambiguous information or high complexity for human review.

3. Ethical Concerns and Bias Mitigation

AI medical coding is powerful, but it comes with real ethical responsibilities. When you give an algorithm the authority to translate clinical care into billable codes, you also give it the power to shape patient records, influence reimbursement, and affect long-term health outcomes.

When such a system works as a black box, coders, clinicians, and auditors lose visibility into why a code was assigned or how a decision was made.

Oversight also matters because recent research has reported that some frontier AI models, when strongly pushed toward a goal, attempted to bypass or disable safety measures.

Ready to Augment Your RCM Team With Autonomous Medical Coding?

As the debate around full autonomy versus human oversight continues, one thing is increasingly clear: the future of medical coding isn’t about choosing between humans or AI—it’s about designing systems where both do what they do best.

Fully autonomous coding, without safeguards, raises legitimate concerns about bias, transparency, and accountability. But autonomy paired with responsible human review creates something far more powerful than either could achieve alone.

This is exactly the vision behind CombineHealth’s autonomous medical coding solution. Amy, our AI medical coding agent, complements the work of medical coders by:

- Coding high-confidence encounters end-to-end

- Flagging ambiguous or risky cases for human review

- Explaining every decision she makes

- Continuously learning from feedback, guidelines, and payer rules

Book a demo to see Amy in action!

FAQs

How accurate is autonomous medical coding compared to human coders?

Vendors commonly report that autonomous coding systems match or exceed human coder accuracy on routine, high-volume encounters. Some cite 95–99% accuracy when the system is trained on high-quality data. These figures depend on how accuracy is measured, and real-world accuracy varies by specialty and documentation quality, which is why human oversight remains essential for complex cases.

How do confidence thresholds and human review workflows work in autonomous coding?

Each chart receives a confidence score based on how certain the AI is about its code selections. High-confidence encounters are auto-coded, while low-confidence, ambiguous, or high-risk cases automatically route to human coders. This ensures efficiency without sacrificing safety, with humans reviewing only the cases that genuinely need expert judgment.
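The routing rule can be sketched in a few lines. This version makes two assumptions: the chart is only as confident as its least-confident code, and any failed compliance check forces human review. The field names and the 0.9 threshold are hypothetical. In practice, a threshold would be set from validation data and only makes sense if the confidence scores are calibrated.

```python
from dataclasses import dataclass, field


@dataclass
class ChartResult:
    """Hypothetical per-chart output of the coding engine."""
    chart_id: str
    code_confidences: dict[str, float]                    # code -> confidence in [0, 1]
    compliance_flags: list[str] = field(default_factory=list)


def route(chart: ChartResult, threshold: float = 0.9) -> str:
    """Auto-code only when every code clears the threshold and no check failed."""
    if chart.compliance_flags:
        return "human_review"   # e.g. a bundling or medical-necessity edit fired
    if not chart.code_confidences:
        return "human_review"   # nothing coded: let a person look
    if min(chart.code_confidences.values()) >= threshold:
        return "auto_code"
    return "human_review"


print(route(ChartResult("c1", {"I10": 0.97, "E11.9": 0.93})))
print(route(ChartResult("c2", {"I10": 0.97, "E11.65": 0.60})))
```

Using the minimum rather than the average keeps one shaky code from being hidden by several confident ones.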

What are the common compliance and audit risks with autonomous coding?

Major risks include upcoding, insufficient documentation support, missing modifiers, ignoring payer-specific rules, and a lack of transparent reasoning. If left unchecked, these issues can trigger denials, repayments, or even legal exposure. Responsible systems mitigate this through built-in compliance checks, explainability, confidence thresholds, and continuous human oversight.

How do regulatory bodies (e.g., CMS, DOJ, OIG) view autonomous coding, and what are the latest compliance guidelines?

CMS, DOJ, and OIG do not prohibit autonomous coding but expect strong human oversight, audit trails, transparency, and clear documentation support. The DOJ has already penalized upcoding tied to automated systems.

What types of claims are most vulnerable to risk if coded autonomously without human oversight?

High-complexity encounters pose the most risk: emergency medicine, surgical cases, critical care, multi-system conditions, rare diagnoses, and charts with unclear or incomplete documentation. These cases require nuanced clinical judgment and are more likely to be audited. Without human review, they may be mis-coded or flagged for compliance violations.
