Explainability in AI for Healthcare RCM: A Smarter Approach to Decision-Making

Learn how explainable AI in healthcare RCM works, why it matters now, and how transparent automation helps reduce denials, risk, and rework.

January 1, 2026

Key Takeaways:

• Explainable AI is a financial, compliance, and risk requirement as denials, audits, and automation pressure rise.

• Unlike black-box AI, explainable systems show why a code, denial risk, or action was chosen, tied to documentation, payer rules, and guidelines.

• Explainability enables oversight at scale, helping RCM leaders prevent errors before submission instead of reacting after denials.

• Across coding, billing, payments, and appeals, real explainability means human-readable rationale—not just confidence scores or alerts.

• The right KPIs shift from volume to defensibility: higher touchless rates, fewer false alerts, stronger appeal wins, and lower audit risk.

A few years ago, AI in healthcare RCM felt experimental. Interesting, promising, but safely abstract.

Today, explainability in AI has quietly shifted from a technical discussion to a financial, legal, and operational necessity for RCM leaders. Studies have reported that explainable AI (XAI) increases clinician trust compared with "black box" AI, and that explanations help keep clinicians from over-trusting incorrect AI suggestions.

Payers are deploying increasingly sophisticated algorithms to deny, downcode, and bundle claims. Regulators are scrutinizing automated decision-making more aggressively. And providers are discovering that when AI decisions can’t explain themselves, the liability doesn’t disappear—it lands squarely on their balance sheet.

At the same time, RCM teams are under pressure to automate faster than ever. Denials are rising. Staffing is tight. Generative AI is tempting teams with speed—sometimes at the cost of accuracy and compliance. In this environment, “black box” AI isn’t just unhelpful. It’s dangerous.

This is where Explainability in AI becomes the difference between automation that scales safely and automation that quietly compounds risk.

In this guide, we’ll unpack what AI explainability actually means in healthcare RCM, why it matters now more than ever, and how leaders can tell the difference between real transparency and vendor buzzwords.

What is AI Explainability in Healthcare?

AI explainability in healthcare, also known as model explainability, refers to the ability of an AI system to clearly show how and why it arrived at a specific decision, using logic that humans can understand, review, and validate.

Many AI models, especially those based on neural networks and deep learning, are considered "black boxes" because their inner workings are difficult to interpret, even for the engineers and data scientists who built them.

This opacity makes it hard to understand or explain how such a model reaches its output. Explainable AI (XAI) aims to counter it by providing transparency into the model's reasoning and making its decision-making process easier to follow.

In practice, an explainable system doesn’t just output a result such as “Code: 99215,” “High risk of denial,” or “Appeal approved.”

It also explains:

- What data it used

- Which clinical or financial factors mattered most

- How payer rules, documentation, or guidelines influenced the decision


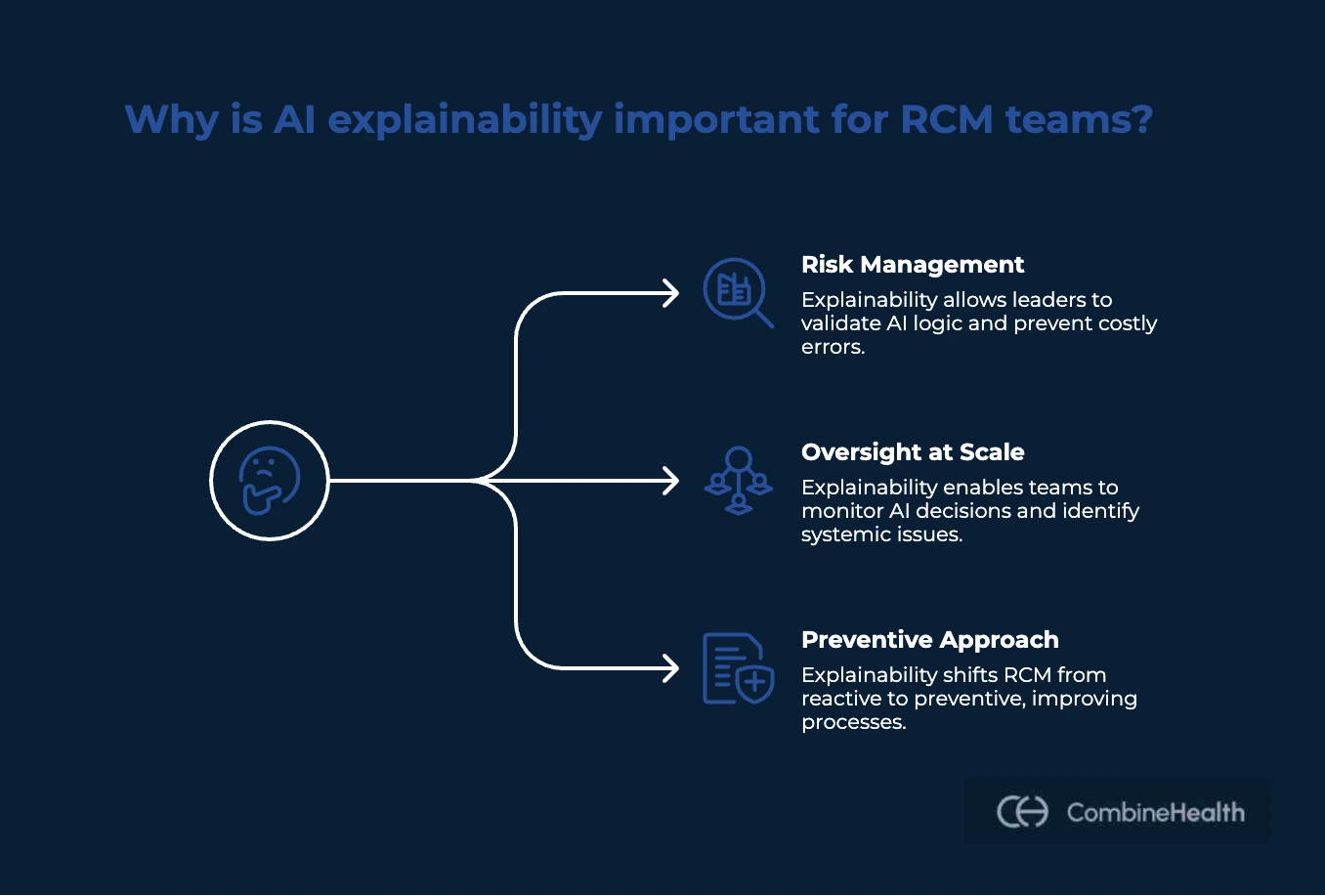

Why Does AI Explainability Matter Specifically for RCM Teams?

RCM teams sit at the intersection of finance, compliance, operations, and technology. When AI enters that equation, the stakes are different than in most other functions. Ongoing explainability research in AI is focused on helping organizations address compliance, legal, and ethical challenges, especially in high-stakes domains like healthcare. Here’s why it matters for RCM teams:

RCM Leaders Own the Risk, Even When AI Makes the Decision

In healthcare RCM, if an AI systematically upcodes, applies payer rules incorrectly, or submits claims with unsupported medical necessity, the provider is still liable, not the AI vendor.

Explainability gives RCM leaders a way to see and validate the logic before risk compounds. It answers questions like:

- Why was this E/M level selected?

- Which documentation elements supported it?

- Which payer rule was applied—and where did it come from?

AI Explainability Enables Oversight at Scale

RCM teams need AI systems to scale judgment without losing control. That's where explainable AI allows them to:

- Spot systemic issues (not just one-off errors)

- Identify when a model is drifting toward risky behavior

- Set confidence thresholds for automation safely

- Audit AI decisions without slowing down operations
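
One way to picture drift monitoring is to compare the mix of codes a model assigns today against a trusted baseline period. The sketch below is only an illustration under stated assumptions: the function names, the 0.10 threshold, and the sample code counts are all hypothetical, and total variation distance is just one of several reasonable ways to measure a shift.

```python
from collections import Counter

def code_distribution(codes):
    """Return each code's share of all assigned codes."""
    counts = Counter(codes)
    total = sum(counts.values())
    return {code: n / total for code, n in counts.items()}

def total_variation_distance(baseline, current):
    """Half the summed absolute difference between two distributions (0 = identical)."""
    all_codes = set(baseline) | set(current)
    return 0.5 * sum(abs(baseline.get(c, 0.0) - current.get(c, 0.0)) for c in all_codes)

def drift_report(baseline_codes, current_codes, threshold=0.10):
    """Flag drift and list the codes whose share moved the most."""
    baseline = code_distribution(baseline_codes)
    current = code_distribution(current_codes)
    distance = total_variation_distance(baseline, current)
    shifts = sorted(
        ((c, current.get(c, 0.0) - baseline.get(c, 0.0)) for c in set(baseline) | set(current)),
        key=lambda item: abs(item[1]),
        reverse=True,
    )
    return {"distance": distance, "drifted": distance > threshold, "largest_shifts": shifts[:2]}

# Hypothetical E/M code mix: the share of 99215 grows from 10% to 25%.
baseline_codes = ["99213"] * 50 + ["99214"] * 40 + ["99215"] * 10
current_codes = ["99213"] * 35 + ["99214"] * 40 + ["99215"] * 25
report = drift_report(baseline_codes, current_codes)
print(report)
```

A report like this does not say whether the shift is wrong, only that it happened. The claim-level rationale is what lets a reviewer decide whether the documentation actually supports the higher levels.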

Explainability in AI Shifts RCM From Reactive to Preventive

Explainable AI tells leaders why a claim is likely to go wrong and what to fix before submission.

For RCM leaders, this changes how work is prioritized:

- From appeals after denial → to prevention before submission

- From probability scores → to root-cause correction

- From firefighting → to process improvement

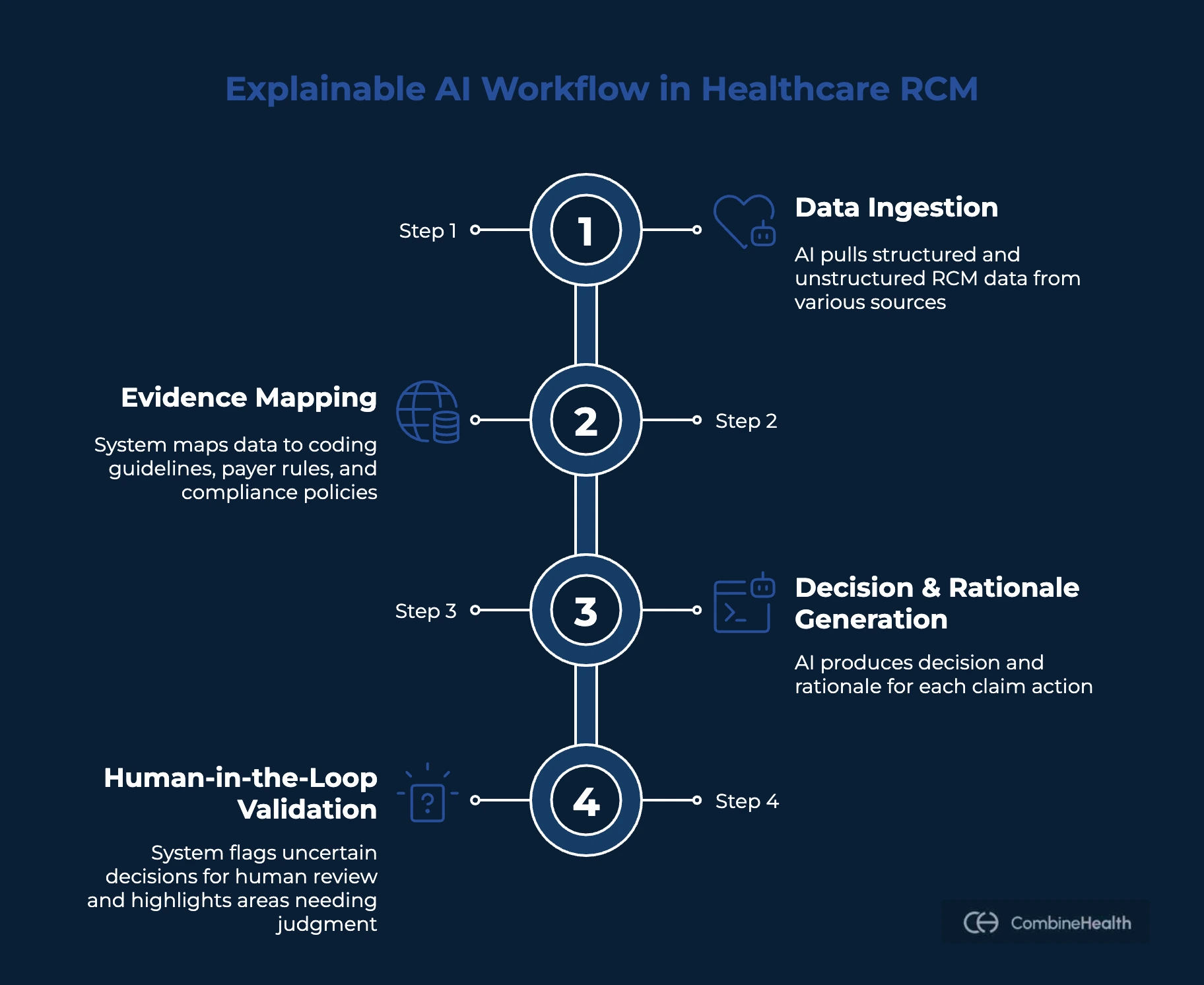

How Does Explainable AI in Healthcare RCM Work?

Explainable AI in healthcare RCM works by pairing advanced automation with a human-readable reasoning layer. Here’s how:

When an AI model processes a claim, it generates two outputs: the decision (such as whether to approve, deny, or flag the claim) and the rationale (the explanation for that decision). Users see not just what the AI decided, but why.

This addresses the black-box nature of many machine learning algorithms: the reasoning behind each prediction is surfaced alongside the prediction itself.

Step 1: Ingesting Structured and Unstructured RCM Data

Explainable AI pulls from:

- Clinical documentation (patient encounters, provider’s notes, clinical assessments, operative reports)

- Medical codes (ICD-10, CPT, HCPCS, modifiers)

- Payer policies and medical necessity rules

- Historical denial and payment data

- Eligibility and benefit information

Step 2: Mapping Evidence to Rules and Guidelines

Next, the system evaluates the data against coding guidelines (E/M, ICD-10, CPT), payer-specific billing and medical necessity rules, and internal compliance policies.

This is where AI explainability begins.

Instead of applying rules invisibly, the explainable AI creates explicit mappings, such as:

- Which documentation elements support a code

- Which payer rule applies to a claim

- Which criteria are met and which are missing
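
The mapping described above can be sketched as a small data structure. This is a simplified illustration, not how any particular product works: the criteria, keywords, and note sentences are hypothetical, and plain keyword matching stands in for whatever language understanding a real system would use.

```python
from dataclasses import dataclass

@dataclass
class Criterion:
    name: str        # what the (hypothetical) payer rule requires
    keywords: tuple  # phrases treated as supporting evidence in this sketch

def map_evidence(note_sentences, criteria):
    """Link each criterion to the note sentences that support it."""
    evidence = {}
    for criterion in criteria:
        evidence[criterion.name] = [
            sentence for sentence in note_sentences
            if any(k.lower() in sentence.lower() for k in criterion.keywords)
        ]
    met = [name for name, found in evidence.items() if found]
    missing = [name for name, found in evidence.items() if not found]
    return {"evidence": evidence, "met": met, "missing": missing}

# Hypothetical imaging policy and chart excerpt.
criteria = [
    Criterion("conservative therapy documented", ("physical therapy", "NSAID")),
    Criterion("symptom duration documented", ("weeks", "months")),
    Criterion("neurological deficit documented", ("weakness", "numbness")),
]
note = [
    "Patient reports low back pain for 8 weeks.",
    "Completed 6 weeks of physical therapy without relief.",
    "No imaging on file.",
]
result = map_evidence(note, criteria)
print("Met:", result["met"])
print("Missing:", result["missing"])
```

Because every criterion points back to specific sentences, a reviewer can check the mapping directly instead of rereading the chart.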

Step 3: Generating a Decision and Its Rationale

For each action, the explainable AI produces two outputs:

- The decision: Code selection, denial risk assessment, appeal recommendation.

- The rationale: Supporting documentation excerpts, applied rules or guidelines, logical steps used to reach the conclusion.
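
A minimal sketch of what "two outputs" might look like as a record, assuming hypothetical class and field names (`Decision`, `Rationale`, `explain`) and a made-up claim ID and confidence value:

```python
from dataclasses import dataclass

@dataclass
class Rationale:
    evidence: list         # documentation excerpts that support the decision
    rules_applied: list    # guidelines or payer rules used
    reasoning_steps: list  # ordered steps from evidence to conclusion

@dataclass
class Decision:
    claim_id: str
    action: str
    value: str
    confidence: float
    rationale: Rationale

    def explain(self):
        """Render the decision and its rationale as human-readable text."""
        lines = [f"{self.action}: {self.value} (confidence {self.confidence:.2f})"]
        lines.append("Evidence:")
        lines += [f"  - {e}" for e in self.rationale.evidence]
        lines.append("Rules applied:")
        lines += [f"  - {r}" for r in self.rationale.rules_applied]
        lines.append("Reasoning:")
        lines += [f"  {i}. {s}" for i, s in enumerate(self.rationale.reasoning_steps, 1)]
        return "\n".join(lines)

decision = Decision(
    claim_id="CLM-001",  # hypothetical identifier
    action="Code selection",
    value="99215",
    confidence=0.93,     # illustrative value
    rationale=Rationale(
        evidence=[
            "High-risk drug management documented in the assessment",
            "Independent interpretation of imaging documented in the assessment",
        ],
        rules_applied=["E/M leveling criteria (medical decision making)"],
        reasoning_steps=[
            "Matched documentation elements to E/M leveling criteria",
            "Selected the highest level fully supported by the documentation",
        ],
    ),
)
print(decision.explain())
```

Storing the rationale next to the decision, rather than generating it later, means the explanation a reviewer sees is the one that was produced when the decision was made.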

Step 4: Human-in-the-Loop Validation

Explainable AI is designed to invite human oversight, not bypass it.

When confidence is high, claims may move forward automatically.

But when uncertainty exists:

- The system flags the decision

- Highlights where judgment is required

- Shows exactly what needs review
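
The routing logic in this step can be sketched in a few lines. The function name, the 0.95 threshold, and the return shape are assumptions for illustration; a real threshold would be set and tuned by the organization.

```python
def route_decision(confidence, missing_criteria, auto_threshold=0.95):
    """Send a decision to auto-submission or to human review, with reasons."""
    if confidence >= auto_threshold and not missing_criteria:
        return {"route": "auto_submit", "review_items": []}

    # Tell the reviewer exactly what needs attention.
    review_items = [f"Missing: {criterion}" for criterion in missing_criteria]
    if confidence < auto_threshold:
        review_items.append(
            f"Confidence {confidence:.2f} is below threshold {auto_threshold:.2f}"
        )
    return {"route": "human_review", "review_items": review_items}

clean = route_decision(0.98, [])
flagged = route_decision(0.81, ["independent interpretation of imaging"])
print(clean)
print(flagged)
```

The point of returning `review_items` rather than a bare flag is that the reviewer starts from the specific gap, not from the whole chart.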

How is Explainable AI Used in Healthcare RCM?

Explainable AI applies wherever a decision affects reimbursement, compliance, or patient trust in healthcare RCM. In high-stakes environments like healthcare and finance, AI systems often rely on complex, opaque algorithms that are difficult to interpret, which makes explainability essential for building trust in them.

Here’s how that plays out at each stage of the RCM lifecycle:

Clinical Documentation Integrity (CDI)

Explainable AI ties documentation suggestions directly to clinical evidence already in the chart, such as labs, imaging, or provider statements.

Instead of:

“Please specify acuity.”

Explainable AI shows:

“Creatinine levels and nephrology consult support acute kidney injury, but documentation lists ‘unspecified.’”

Medical Coding

Coding decisions are among the high-risk areas in RCM.

Decision trees are an example of interpretable models often used in medical coding because they provide clear, understandable logic paths.

Explainable AI doesn’t just output a code; it also shows:

- Which documentation elements supported the code

- How MDM or time thresholds were met

- Which guideline or payer rule was applied

Feature Importance techniques can identify which input features most influence a coding prediction, helping medical coders understand and trust the AI's recommendations.

Example:

“CPT 99215 selected due to high-risk drug management and independent interpretation of imaging documented in the assessment.”

Claim Scrubbing & Pre-Submission Validation

Traditional claim scrubbers catch surface-level errors. Predictive AI systems add a denial score, but not a solution.

Explainable AI identifies:

- The specific rule that will trigger denial

- The exact missing or conflicting data

- The action required to fix it

- The feature attributions that clarify which specific data points or features led to a claim being flagged for denial

Explanation techniques like feature importance, LIME, and SHAP are used to translate complex AI decisions into understandable insights for RCM teams.

Instead of:

“High risk of denial.”

Explainable AI says:

“MRI likely to be denied because the documented conservative therapy duration does not meet payer policy for diagnosis X.”

Prior Authorization

Prior Authorization denials often happen due to incomplete or weak clinical justification.

Explainable AI maps payer policy requirements directly to supporting phrases in provider notes.

Patient Estimates & Financial Communication

Patients don’t trust numbers they can’t understand. Explainable AI breaks down:

- Deductible status

- Allowed amounts

- Plan-specific logic

Example:

“Your estimate is $600 because your deductible is unmet and your plan allows $600 for this service.”

Documentation gaps, such as missing details, can also lead to increased costs or claim denials.
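
The arithmetic behind the $600 estimate example can be sketched as follows. This is a deliberately simplified model: the function name and parameters are hypothetical, and it ignores copays, out-of-pocket maximums, and other plan features a real estimate would need.

```python
def estimate_patient_cost(allowed_amount, deductible_remaining, coinsurance_rate=0.0):
    """Return the patient's estimated cost and the steps used to reach it."""
    # The patient pays the allowed amount until the deductible is met.
    deductible_portion = min(allowed_amount, deductible_remaining)
    # After the deductible, the patient pays a share of what is left.
    coinsurance_portion = (allowed_amount - deductible_portion) * coinsurance_rate
    total = round(deductible_portion + coinsurance_portion, 2)
    explanation = [
        f"Plan allows ${allowed_amount:.2f} for this service",
        f"${deductible_portion:.2f} applies to your remaining deductible",
        f"${coinsurance_portion:.2f} is your coinsurance on the rest",
        f"Estimated total: ${total:.2f}",
    ]
    return total, explanation

# Deductible fully unmet: the whole allowed amount is owed.
total, explanation = estimate_patient_cost(600, 1500)
print("\n".join(explanation))

# Deductible partly met, with 20% coinsurance on the remainder.
partial_total, _ = estimate_patient_cost(600, 200, coinsurance_rate=0.2)
print(partial_total)
```

Returning the steps together with the number is what turns an estimate into something a patient can follow line by line.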

Interpretability vs Explainability in AI

Interpretability and explainability are often used interchangeably in AI discussions. But they're not the same thing, and the difference matters when financial accountability, audits, and compliance are involved.

Interpretability refers to how easy it is to understand how an AI model works internally.

In highly interpretable systems, such as rule-based models or decision trees, you can often see:

- The rules being applied

- The weight of each input

- The decision path from input to output

But that transparency often comes at a cost: highly interpretable models usually sacrifice power and nuance. They may:

- Miss edge cases

- Fail to adapt across payers

- Oversimplify clinical context

Explainability focuses on something more practical: Why did the AI make this specific decision for this specific claim?

AI explainability provides:

- Claim-level rationale

- Evidence from documentation

- Payer or guideline references

- A traceable logic path

What Real AI Explainability Looks Like (Real Workflows by CombineHealth)

CombineHealth uses explainable AI anywhere there's an RCM decision being made. That means anytime our AI selects a code, prepares a claim, posts a payment, prioritizes follow-up, or drafts an appeal, it also produces a human-readable rationale that can be reviewed, validated, and defended.

Here’s how that shows up across three of the core RCM workflows:

Coding, Auditing, and CDI

When our AI Medical Coding Agent Amy assigns ICD-10, CPT, E/M, modifiers, or HCCs, it never outputs a code in isolation.

For every coding decision, we provide:

- The specific documentation evidence used (note sections, findings, interpretations)

- The guideline logic applied (E/M leveling criteria, payer-specific rules)

- The reason competing codes were excluded

- Clear flags when documentation gaps exist (e.g., missing independent interpretation, incomplete procedure detail)

This allows coders and auditors to validate why a code was selected—without rereading the entire chart. It also enables systematic audits, not just spot checks, because the rationale is consistent and repeatable across encounters.

Billing & Claim Creation

When our AI Medical Billing Agent Mark prepares claims, explainability follows the claim forward.

For every billing action, the system records:

- Which payer-specific SOPs were applied

- How codes and modifiers were validated for that payer

- Why a claim was marked ready for submission or held back

- Which historical denial patterns influenced pre-submission checks

Payment Posting & Reconciliation

When Mark reads ERAs or EOBs (including scanned PDFs), payment posting is never treated as a blind automation step.

For each reconciliation event, we surface:

- Expected vs. received amounts

- Contractual adjustment logic used

- Identified variances and write-offs

- The reason a balance was closed, escalated, or sent to A/R

This helps instantly address many downstream questions: Was this underpaid? Was this contractual? Should this be appealed?

What Are the Latest Advancements in Explainable AI?

Explainable AI (XAI) has evolved quickly in response to growing model complexity.

Below are the most important advancements shaping explainable AI today:

More Mature Post-Hoc Explanation Techniques

Post-hoc methods explain individual decisions without changing the underlying model. Recent improvements have made these explanations more reliable and actionable.

Key techniques include:

- SHAP (SHapley Additive exPlanations): Breaks down how each input contributed to a specific prediction

- LIME (Local Interpretable Model-agnostic Explanations): Provides localized, human-readable explanations around a single decision

- Counterfactual explanations: Show what would need to change to reach a different outcome
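
To make the idea behind SHAP more concrete, the sketch below computes exact Shapley values for a toy denial-risk function by enumerating every combination of features against a single baseline claim. It is not the `shap` library's API or its approximation methods, and the `denial_risk` function, its weights, and the feature names are all made up for illustration.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, instance, baseline):
    """Exact Shapley values: each feature's average marginal contribution."""
    features = list(instance)
    n = len(features)

    def value(coalition):
        # Features in the coalition use the instance's value; the rest use the baseline's.
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in features}
        return predict(x)

    contributions = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                total += weight * (value(set(subset) | {f}) - value(set(subset)))
        contributions[f] = total
    return contributions

def denial_risk(x):
    """Toy risk score with one interaction term (hypothetical weights)."""
    score = 0.10
    if x["prior_auth_missing"]:
        score += 0.40
    if x["therapy_weeks"] < 6:
        score += 0.25
    if x["prior_auth_missing"] and x["out_of_network"]:
        score += 0.15
    return score

claim = {"prior_auth_missing": True, "therapy_weeks": 3, "out_of_network": True}
typical = {"prior_auth_missing": False, "therapy_weeks": 8, "out_of_network": False}
attributions = shapley_values(denial_risk, claim, typical)
for feature, contribution in attributions.items():
    print(f"{feature}: {contribution:+.3f}")
```

The contributions add up to the gap between this claim's score and the baseline's score, which is the property that makes this style of attribution easy to audit. Exhaustive enumeration only works for a handful of features, which is why practical tools rely on approximations.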

Shift Toward Inherently Explainable Models

Rather than explaining complex models after the fact, newer approaches aim to build explainability into the model itself.

Examples include:

- Sparse neural networks with fewer, more meaningful signals

- Attention-based architectures that surface what the model focused on

- Self-explaining neural networks that output both predictions and reasoning

Attention Mechanisms as a Transparency Layer

Attention mechanisms (widely used in language models) are increasingly applied to explanations.

They help show:

- Which parts of a clinical note influenced a decision

- Which policy sections mattered most

- Which documentation gaps triggered risk

Causal Reasoning Over Pure Correlation

A major advancement in XAI is the move from correlation to cause-and-effect reasoning.

Causal approaches answer:

- What specifically caused this outcome?

- What change would have prevented it?
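
The second question, what change would have prevented the outcome, can be illustrated with a small counterfactual search. This is a simplified stand-in rather than a full causal model: it brute-forces the smallest set of field changes that makes a claim pass a rule check, and the policy, field names, and candidate values are hypothetical.

```python
from itertools import combinations

def find_counterfactual(claim, passes, candidate_changes):
    """Return the smallest set of field changes that makes passes(claim) true."""
    if passes(claim):
        return {}
    fields = list(candidate_changes)
    # Try single changes first, then pairs, and so on.
    for size in range(1, len(fields) + 1):
        for subset in combinations(fields, size):
            modified = dict(claim)
            for field in subset:
                modified[field] = candidate_changes[field]
            if passes(modified):
                return {f: (claim[f], candidate_changes[f]) for f in subset}
    return None  # no combination of the candidate changes is enough

def passes_policy(claim):
    """Hypothetical payer rule: 6+ weeks of therapy and prior auth on file."""
    return claim["therapy_weeks"] >= 6 and claim["prior_auth_on_file"]

claim = {"therapy_weeks": 4, "prior_auth_on_file": True, "place_of_service": "22"}
candidate_changes = {"therapy_weeks": 6, "prior_auth_on_file": True, "place_of_service": "11"}
fix = find_counterfactual(claim, passes_policy, candidate_changes)
print(fix)
```

Here the answer is a single field, the therapy duration, shown as an (old value, new value) pair. A real system would also need to confirm that the suggested change reflects what actually happened clinically, since the goal is to fix documentation gaps, not to alter facts.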

Human-Centered and Interactive Explanations

Modern XAI systems are designed around how people actually consume explanations.

They increasingly include:

- Role-specific explanations (coder vs. CFO vs. compliance)

- Interactive views that allow “what-if” exploration

- Confidence and uncertainty indicators alongside recommendations

Why Explainable AI and Governance Go Hand in Hand

Coding decisions, denial outcomes, and documentation gaps don’t just affect margins—they carry compliance risk, audit exposure, and downstream operational consequences.

Explainable AI provides the why behind each output—linking decisions back to clinical documentation, payer rules, and guidelines. Governance ensures those explanations are consistent, reviewable, and aligned with evolving regulatory expectations. Together, they create systems people can trust, act on, and stand behind during audits.

This philosophy is deeply rooted in how CombineHealth approaches AI. It was shaped by the experience of its CEO, Sourabh, in building and deploying AI in industries where the margin for error is nearly zero.

Sourabh built AI systems at Goldman Sachs, in self-driving cars, and later at UpTrain, where explainability and governance weren’t optional. When he moved into healthcare, he saw the same stakes, but with one major difference: hospitals didn’t have the same quality of technology.


KPIs to Track Explainable AI Success in Healthcare RCM

Unlike traditional automation, the ROI of explainable AI shows up in validation efficiency, confidence, and defensibility, not just volume.

Below are the KPIs that matter most:

Return on Investment: Primary Metric

The clearest financial signal CFOs track is overall ROI.

Example: $3.20 returned for every $1 invested within ~14 months.

This return comes from faster throughput, lower rework, fewer denials, and reduced audit exposure—not from replacing staff outright.

Alert Validity Rate

Measures how often AI alerts are actually useful.

- Higher alert validity = fewer false positives

- Less time wasted reviewing “noise”

- Greater trust in AI recommendations

Touchless Claims Rate

Tracks the percentage of claims that move from coding to submission without human intervention.

- Explainability allows leaders to raise confidence thresholds safely

- Teams approve automation because they can review the logic, not just the outcome

- Higher touchless rates = lower cost to collect

Appeal Win Rate

Measures the effectiveness of denial appeals.

- Explainable AI ties appeal arguments directly to clinical evidence and payer rules

- Appeals are more targeted and defensible

- Win rates increase compared to generic, template-based appeals
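
The KPIs above are simple ratios, and it helps to pin down exactly what goes in the numerator and denominator. The sketch below assumes hypothetical record fields (`was_valid`, `human_touched`, `won`) and uses made-up sample counts; the dollar figures are chosen only to reproduce the $3.20-per-$1 example.

```python
def rate(numerator, denominator):
    """Safe ratio that returns 0.0 when there is nothing to measure."""
    return numerator / denominator if denominator else 0.0

def rcm_kpis(alerts, claims, appeals, dollars_returned, dollars_invested):
    """Compute the explainability KPIs from simple event records."""
    return {
        # Share of AI alerts that reviewers confirmed as real issues.
        "alert_validity_rate": rate(sum(a["was_valid"] for a in alerts), len(alerts)),
        # Share of claims that went from coding to submission untouched.
        "touchless_claims_rate": rate(sum(not c["human_touched"] for c in claims), len(claims)),
        # Share of appeals that were overturned in the provider's favor.
        "appeal_win_rate": rate(sum(a["won"] for a in appeals), len(appeals)),
        # Dollars returned per dollar invested.
        "return_per_dollar": rate(dollars_returned, dollars_invested),
    }

# Illustrative sample data, not real results.
alerts = [{"was_valid": True}] * 8 + [{"was_valid": False}] * 2
claims = [{"human_touched": False}] * 70 + [{"human_touched": True}] * 30
appeals = [{"won": True}] * 6 + [{"won": False}] * 4
kpis = rcm_kpis(alerts, claims, appeals, dollars_returned=320_000, dollars_invested=100_000)
for name, value in kpis.items():
    print(f"{name}: {value:.2f}")
```

Tracking these per payer or per workflow, rather than as one blended number, makes it easier to see where explanations are actually earning trust.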

What Technical Questions Must CFOs Ask to Expose “Fake” Explainability?

As explainable AI becomes a popular marketing claim, many RCM vendors now say their systems are transparent.

Here are the questions CFOs should ask and what the answers must reveal:

1. How was the model trained, and what data was used?

Ask for details about the training data set. Understanding what data the model was trained on is crucial for assessing model behavior, feature importance, and trustworthiness, especially in healthcare.

The training data is the foundation from which the model learns correlations, but a learned correlation is not proof of causation.

Also ask about the test dataset used to evaluate model performance. Assessing the model on separate, held-out data is essential for understanding how well it generalizes and for building trust in its decisions.

2. Does the model train exclusively on our data, or is it pooled?

This question surfaces hidden risk around model behavior and compliance.

If a vendor pools data across customers:

- Your AI may learn from other organizations’ coding habits

- Bad practices can propagate silently

- You lose control over how the model evolves

CFOs should look for:

- Clear separation between customer data

- Transparent retraining and feedback mechanisms

- Explicit governance over what influences future decisions

3. Can we see the decision logic for a single claim?

This is the most important test of explainability.

Ask the vendor to walk through:

- One real claim

- One real decision

- With supporting documentation and applied rules

A truly explainable system can show:

- Which inputs mattered

- Which guidelines were applied

- How the final decision was reached

4. How do you detect and prevent Shadow AI usage?

Shadow AI is an overlooked risk in RCM environments.

Under pressure, staff may:

- Paste PHI into public AI tools

- Use unapproved extensions to draft appeals

- Bypass internal safeguards for speed

CFOs should ask:

- How does the vendor monitor unauthorized AI usage?

- Are safeguards in place to prevent PHI leakage?

- Is there an approved, auditable alternative for staff?

Further Reading: Evaluating an AI RCM billing and coding solution to ensure you ask the right questions before choosing a vendor.

Put Explainable AI to Work in Your RCM Operation

The real question for RCM leaders isn’t whether to use AI.

It’s whether you can understand, trust, and defend the decisions it makes.

Explainability in AI is what separates:

- Automation that quietly increases compliance risk

- From automation that strengthens revenue integrity

If you want to see what explainable, audit-ready AI actually looks like in real RCM workflows, book a demo with us.

FAQs

What is explainability in AI?

Explainability in AI is the ability of a system to clearly show why it made a specific decision, including which inputs, rules, or evidence influenced the outcome, in a way humans can understand, review, and validate.

How can interpretability improve trust in AI systems?

Interpretability lets users see how inputs affect outputs, reducing blind reliance. When people can follow the logic behind predictions, they’re more likely to trust, challenge, and responsibly adopt AI—especially in high-stakes decisions.

What are the different explainable AI methods?

Explainable AI methods include rule-based models, decision trees, feature importance analysis, post-hoc explanations (like SHAP and LIME), counterfactual explanations, and attention-based explanations that highlight influential inputs.

What are the most effective techniques for model interpretability?

Effective techniques include decision trees, linear models, SHAP values, LIME, partial dependence plots, feature attribution methods, and attention mechanisms—chosen based on model complexity, risk level, and audience needs.

What are the key differences between explainable and interpretable AI?

Interpretable AI focuses on understanding how a model works internally. Explainable AI focuses on explaining why a specific decision was made, even if the underlying model itself is complex or opaque.
